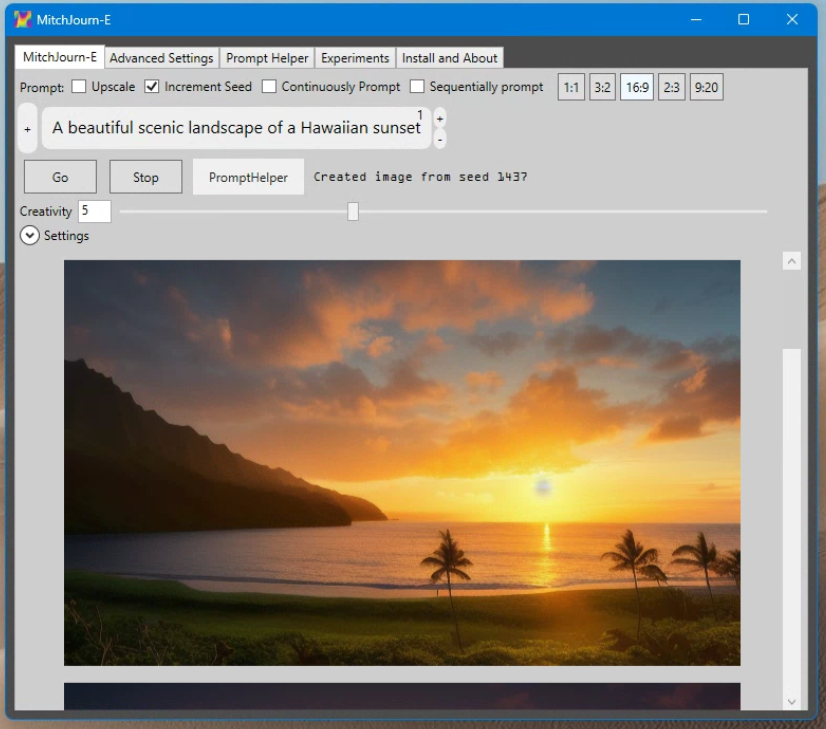

Creating music that truly stands out can be a complex process, but understanding the techniques used by successful producers can make all the difference. We’re going to take a closer look at the production techniques used on GLOWSTONE’s hit track “LIGMA”, examining how the vocal effects were used to create a unique, distorted vibe while still allowing the performance to shine through. We’ll also delve into the backgrounds of the producers behind the track, Sober Rob and Madi Makes Musica, who formed GLOWSTONE as a couple. With a combination of powerful vocals, heavy distortion, and infectious beats, GLOWSTONE makes some great music.

Vocals are the king of a mix and must be treated as such. Processing the vocals in your track is one of the most important steps in creating music that slaps. So when I’m deciding how to process the vocal effects in my tracks, it’s useful to see how great producers handle the task.

Today we’re looking at the production techniques on GLOWSTONE’s “LIGMA”, going into detail on how the vocal effects blend Madi’s voice into the distorted vibe of the track while still letting the performance soar above the mix.

Meet the Producers

LIGMA was produced by Sober Rob and Madi Makes Musica, who formed GLOWSTONE as a couple. Both can be found on SoundCloud and Spotify.

Madi, who “writ[es] songs about brain :):”, and Rob, who has been described as “[…]being [a] boundless creative being[…]”, team up to combine great vocals with heavy distortion and amazing beats.

The Effect Chain

Microphone

For vocals to sound good distorted, they first need to sound good recorded. The most distorted sections appear to be captured very close to the source, allowing Madi to whisper into the mic, much like ASMR is often recorded. When the vocals transition to singing, the mic moves a little further back to allow for more dynamic range in the recording. Some harmonies also seem to be recorded from even further back and layered into the vocals during the chorus.
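
To put rough numbers on why close miking suits a whisper, here is a minimal sketch of the inverse-square law. It assumes an idealized point source in a free field, and the 30 cm and 5 cm distances are hypothetical, not measurements from the LIGMA sessions.

```python
import math

def level_change_db(old_distance_cm, new_distance_cm):
    """Change in direct-sound level when the mic moves from old to new distance.

    Assumes an idealized point source in a free field (inverse-square law),
    so halving the distance adds about 6 dB.
    """
    return 20 * math.log10(old_distance_cm / new_distance_cm)

# Hypothetical distances: a singing position vs. whispering right at the capsule
print(f"30 cm -> 5 cm: {level_change_db(30, 5):+.1f} dB")
print(f"5 cm -> 30 cm: {level_change_db(5, 30):+.1f} dB")
```

In a real room the reflected sound stays at roughly the same level as the mic moves, so moving in raises the whisper relative to the room sound, not just in absolute level.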

EQ

Equalization, or EQ, is a crucial element in the production process, as it shapes the sound and balances the frequencies within the song. In LIGMA, Sober Rob and Madi Makes Musica have employed various EQ techniques to create a unique sonic landscape that complements the track’s distorted nature while keeping the different elements clear and distinct.

For Madi’s vocals, it’s essential to maintain the intelligibility and character of her voice while still allowing for the heavy distortion. To achieve this, a high-pass filter is likely applied to remove unwanted low frequencies, usually those below 80 to 100 Hz, that would muddy the mix. A slight boost in the high mids, around 2 to 5 kHz, adds clarity and presence to her voice. Additionally, a gentle high-shelf boost starting around 10 kHz adds air and sparkle to the vocal performance. This EQ approach lets the vocals cut through the mix without sounding harsh or piercing.
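
The three vocal moves above (high-pass, presence boost, high shelf) can be sketched as biquad filters. This is a minimal Python sketch using the coefficient formulas from Robert Bristow-Johnson’s Audio EQ Cookbook; the helper names (`highpass`, `peaking`, `high_shelf`, `vocal_eq`) and the exact settings (90 Hz cutoff, +3 dB at 3 kHz, +2 dB shelf at 10 kHz) are hypothetical picks from the ranges described, not the settings actually used on LIGMA.

```python
import cmath
import math

# Biquad recipes from the RBJ "Audio EQ Cookbook".
# Each returns (b, a) for y = (b0 x + b1 x1 + b2 x2 - a1 y1 - a2 y2) / a0.

def highpass(f0, fs, q=0.707):
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return [(1 + cw) / 2, -(1 + cw), (1 + cw) / 2], [1 + alpha, -2 * cw, 1 - alpha]

def peaking(f0, fs, gain_db, q=1.0):
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return ([1 + alpha * A, -2 * cw, 1 - alpha * A],
            [1 + alpha / A, -2 * cw, 1 - alpha / A])

def high_shelf(f0, fs, gain_db):
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cw = math.cos(w0)
    k = 2 * math.sqrt(A) * math.sin(w0) / math.sqrt(2)  # 2*sqrt(A)*alpha, shelf slope S = 1
    b = [A * ((A + 1) + (A - 1) * cw + k),
         -2 * A * ((A - 1) + (A + 1) * cw),
         A * ((A + 1) + (A - 1) * cw - k)]
    a = [(A + 1) - (A - 1) * cw + k,
         2 * ((A - 1) - (A + 1) * cw),
         (A + 1) - (A - 1) * cw - k]
    return b, a

def biquad(samples, b, a):
    """Direct Form I filter with coefficients normalized by a0."""
    b0, b1, b2 = (c / a[0] for c in b)
    a1, a2 = a[1] / a[0], a[2] / a[0]
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1, y2, y1 = x1, x, y1, y
        out.append(y)
    return out

def response_db(b, a, f, fs):
    """Gain at frequency f, from H(z) evaluated on the unit circle."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

def vocal_eq(samples, fs):
    # Hypothetical settings chosen from the ranges described in the text
    stages = [highpass(90, fs), peaking(3000, fs, 3.0), high_shelf(10000, fs, 2.0)]
    for b, a in stages:
        samples = biquad(samples, b, a)
    return samples
```

`response_db` lets you check a curve without rendering audio, for example `response_db(*highpass(90, 48000), 40, 48000)` shows how much is removed at 40 Hz. In a DAW you would simply reach for an EQ plugin; the sketch only shows what those three bands do to the signal.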

In the final stages of EQing the mix, Sober Rob and Madi Makes Musica would have carefully considered how each element sits together. Any problem frequencies that create harshness, muddiness, or conflict between the elements would have been addressed using surgical EQ techniques, such as notch filtering or narrow boosts and cuts.
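
A surgical cut can be sketched as a narrow notch filter. This sketch assumes the notch recipe from the RBJ Audio EQ Cookbook; the 350 Hz problem frequency and the Q of 8 are hypothetical values for illustration, since the actual problem frequencies in LIGMA aren’t known.

```python
import cmath
import math

def notch(f0, fs, q=8.0):
    """RBJ notch: unity gain away from f0, zero gain at f0; higher q = narrower cut."""
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return [1.0, -2 * cw, 1.0], [1 + alpha, -2 * cw, 1 - alpha]

def magnitude(b, a, f, fs):
    """Linear gain of the biquad at frequency f."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return abs(num / den)

# Hypothetical problem frequency: a boxy resonance found by sweeping around 350 Hz
b, a = notch(350, 48000, q=8.0)
for f in (300, 340, 350, 360, 400, 1000):
    gain_db = 20 * math.log10(max(magnitude(b, a, f, 48000), 1e-12))
    print(f"{f:>5} Hz: {gain_db:7.1f} dB")
```

Raising `q` narrows the cut so less of the surrounding tone is affected; a narrow peaking cut of a few dB is the gentler alternative when a full notch removes too much.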

By thoughtfully applying these EQ techniques, the producers of LIGMA have achieved a well-balanced, cohesive mix that showcases the unique blend of distorted and melodic elements while allowing Madi’s vocal performance to shine through.

Compression

To create the distorted soundscape, the instrumental backing of the track is heavily compressed, which glues the mix together. Side-chaining helps the big 808s punch through the mix by ducking the rest of the audio until the kick has finished punching you in the gut. Throughout the bulk of the track, distorted hi-hats and trumpet- or trombone-like synths flare up with punch and then compress back down to let the 808s back in, which is a major component of the song’s energy.
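
Side-chain ducking boils down to measuring the key signal (here, the 808) and turning everything else down while it is loud. This minimal sketch assumes a simple one-pole envelope follower and a hard-knee compressor curve; the function names, attack and release times, threshold, and ratio are all hypothetical and not taken from the track.

```python
import math

def envelope(key, fs, attack_ms=5.0, release_ms=120.0):
    """One-pole peak follower tracking the side-chain key signal."""
    att = math.exp(-1.0 / (fs * attack_ms / 1000.0))
    rel = math.exp(-1.0 / (fs * release_ms / 1000.0))
    env, out = 0.0, []
    for x in key:
        level = abs(x)
        coeff = att if level > env else rel  # rise fast, fall slowly
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out

def sidechain_duck(signal, key, fs, threshold_db=-24.0, ratio=4.0):
    """Turn `signal` down whenever the key's envelope exceeds the threshold."""
    out = []
    for x, env in zip(signal, envelope(key, fs)):
        level_db = 20.0 * math.log10(max(env, 1e-9))
        over_db = max(0.0, level_db - threshold_db)
        reduction_db = over_db * (1.0 - 1.0 / ratio)  # hard-knee downward compression
        out.append(x * 10.0 ** (-reduction_db / 20.0))
    return out

fs = 48_000
synth_bus = [0.5] * 4_800   # 100 ms of a steady, hypothetical synth bus
key_808 = [1.0] * 4_800     # 100 ms of a loud, sustained key signal
silence = [0.0] * 4_800
ducked = sidechain_duck(synth_bus, key_808, fs)
untouched = sidechain_duck(synth_bus, silence, fs)
print(f"ducked level at 100 ms: {ducked[-1]:.3f}, untouched: {untouched[-1]:.3f}")
```

With a -24 dB threshold and a 4:1 ratio, a full-scale key pushes the bus down by 18 dB; the release time controls how quickly the synths swell back after each hit, which is where much of the pumping feel comes from.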

Distortion

It seems like every element of this track has some amount of distortion applied. To my ear, most of the audio has been waveshaped into loud, square-like waves, which adds upper harmonics and brings lower-toned elements into the upper register. Madi’s vocals feature a blend of clean and distorted sections that contribute to the track’s dynamic and emotive quality. The distorted sections are likely achieved with saturation, overdrive, or dedicated distortion plugins that add harmonic richness and warmth to her voice. This processing can be applied subtly to enhance the natural harmonics of her voice, or more aggressively to create a gritty, powerful effect that complements the distorted instrumental elements.
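
To see why squaring off a waveform pushes low elements into the upper register, this sketch hard-clips one cycle of a sine and measures its harmonics with a plain DFT. It assumes hard clipping as the waveshaper; which plugin or curve was actually used on LIGMA isn’t known, and the drive of 20 is arbitrary.

```python
import math

def hard_clip(x, drive):
    """Boost by `drive`, then clip at +/-1; heavy drive squares off a sine."""
    return max(-1.0, min(1.0, x * drive))

def harmonic_amplitude(cycle, k):
    """Amplitude of harmonic k for a buffer holding exactly one cycle (DFT bin k)."""
    n = len(cycle)
    re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(cycle))
    im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(cycle))
    return 2.0 * math.hypot(re, im) / n

n = 1024
sine = [math.sin(2 * math.pi * i / n) for i in range(n)]  # one cycle of a low note
squared = [hard_clip(s, drive=20.0) for s in sine]        # near-square result

for k in range(1, 8):
    print(f"harmonic {k}: sine {harmonic_amplitude(sine, k):.3f}  "
          f"clipped {harmonic_amplitude(squared, k):.3f}")
```

The pure sine has energy only at harmonic 1, while the clipped version picks up strong odd harmonics (3, 5, 7, ...) that approach the falling 1/k pattern of a square wave. Because the clipping is symmetric, the even harmonics stay at zero.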

The instrumental elements of LIGMA heavily feature distortion. This may be achieved with a variety of techniques, such as analog-style saturation, digital clipping, and waveshaping. Each type of distortion imparts a distinct sonic character that contributes to the overall texture and intensity of the track.

Analog-style saturation, which emulates the sound of overdriven hardware, adds warmth and harmonic richness to the synths and bass. Digital clipping introduces a more aggressive and edgy character, which works well with the track’s heavy and intense atmosphere. Waveshaping, which involves manipulating the waveform of a signal, allows for more creative and experimental distortion effects that can contribute to the track’s unique sound.
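
The three flavors of distortion can be compared as transfer curves, each mapping an input sample to an output sample. In this sketch, `tanh` stands in for analog-style saturation, a hard limit at ±1 for digital clipping, and a sine wavefolder for creative waveshaping; these are common stand-ins chosen for illustration, not models of any specific plugin, and the `drive` default is arbitrary.

```python
import math

def analog_saturate(x, drive=4.0):
    """Soft, tanh-shaped curve: a common stand-in for analog-style saturation."""
    return math.tanh(drive * x) / math.tanh(drive)  # normalized so +/-1 maps to +/-1

def digital_clip(x, drive=4.0):
    """Hard clipping: linear up to the ceiling, then a flat top."""
    return max(-1.0, min(1.0, drive * x))

def wavefold(x, drive=4.0):
    """Hypothetical sine wavefolder: input pushed past a peak folds back toward zero."""
    return math.sin(drive * x * math.pi / 2)

for x in (0.1, 0.25, 0.5, 1.0):
    print(f"{x:>4}: sat={analog_saturate(x):+.3f} "
          f"clip={digital_clip(x):+.3f} fold={wavefold(x):+.3f}")
```

Saturation bends gradually toward the ceiling, clipping hits it abruptly, and the folder reverses direction once pushed past its peak, which is why folding tends to produce denser, less predictable harmonics than the other two.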

It’s essential to balance the levels of distortion in the mix, ensuring that the heavily processed elements do not overpower the more subtle and melodic aspects of the track. This can be achieved through careful gain staging, EQ, and compression, as well as adjusting the blend of wet and dry signals for each processed instrument.
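
Balancing wet and dry signals is often done as parallel distortion with a level-matching step. This sketch assumes the distorted copy is RMS-matched to the dry signal before blending, so the `mix` control changes tone rather than loudness; `parallel_blend` and the 0.35 mix value are hypothetical.

```python
import math

def rms(xs):
    return math.sqrt(sum(x * x for x in xs) / len(xs))

def parallel_blend(dry, wet, mix):
    """Blend dry and distorted signals after matching the wet RMS to the dry RMS.

    mix = 0.0 -> all dry, mix = 1.0 -> all (level-matched) wet.
    """
    if not 0.0 <= mix <= 1.0:
        raise ValueError("mix must be between 0 and 1")
    trim = rms(dry) / max(rms(wet), 1e-12)  # gain-stage the wet path
    return [(1.0 - mix) * d + mix * trim * w for d, w in zip(dry, wet)]

n = 480
dry = [0.3 * math.sin(2 * math.pi * 5 * i / n) for i in range(n)]
wet = [max(-1.0, min(1.0, 10 * d)) for d in dry]  # hard-clipped copy, much louder
blended = parallel_blend(dry, wet, mix=0.35)
print(f"dry RMS {rms(dry):.3f}, raw wet RMS {rms(wet):.3f}, blend RMS {rms(blended):.3f}")
```

Without the trim, the clipped copy here would be much louder than the dry signal, and turning up `mix` would mostly make the track louder, which makes it hard to judge whether the distortion is actually helping.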

Get Producing

Take the lessons of LIGMA and apply them to your own vocal effect chains. Personally, I may go further with some distortion in my mixes, but maybe a new compressor plugin is your take-away. Let me know in the comments if you are using any of these techniques in your tracks. Or learn more audio engineering techniques here.

Thanks for reading ✌